Deep Linking & Deepfakes: Solutions and Issues Explained

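Why is the digital world increasingly shadowed by the potential for manipulation and misinformation? Part of the answer lies in two unrelated technologies that happen to share a word: 'deep linking,' which sends users straight to a specific page or screen, and 'deepfakes,' synthetic media generated with deep learning. Used together, they make fabricated content easier to produce and easier to deliver, creating a landscape where truth is harder to discern and everyone needs to be more vigilant.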

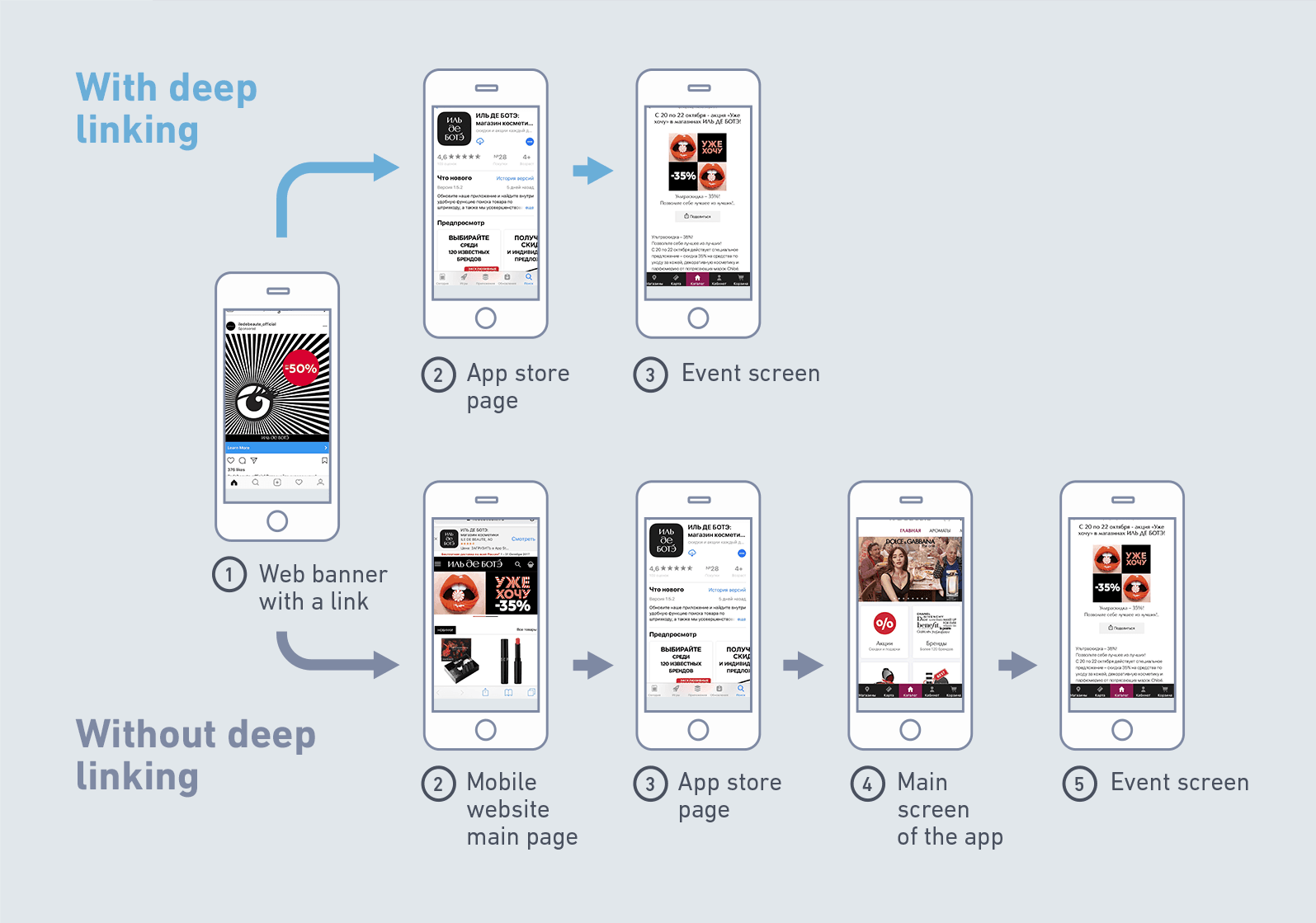

The digital age, with its unprecedented connectivity, has produced powerful tools for communication alongside equally potent instruments of deception. This duality is particularly evident in hyperlinks. A deep link points directly to a specific piece of content within a website, such as an individual article, rather than to the site's homepage, so the user lands straight on the intended destination. (The term is sometimes confused with 'hotlinking,' which means embedding another site's images or files directly in one's own page.) Deep linking is a cornerstone of efficient web navigation, but it also strips away context: a user who clicks a well-crafted link arrives at the content without passing through the site's homepage, navigation, or framing, and may not know where the content came from or why it is being shared. That immediacy creates both convenience and vulnerability, making it easier for malicious actors to spread misinformation or build a false narrative.
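
To make the idea concrete, here is a minimal Python sketch, using only the standard library's `urllib.parse`, that splits a URL into the pieces a link preview might surface: the host, the homepage a visitor would normally start from, and the path the deep link jumps to. The function name `describe_link` and the example URLs are illustrative assumptions, and treating any path other than `/` as "deep" is a deliberately simple rule.

```python
from urllib.parse import urlsplit


def describe_link(url: str) -> dict:
    """Split a URL into the context a reader skips when following a deep link."""
    parts = urlsplit(url)
    path = parts.path or "/"  # an empty path means the site root
    return {
        "host": parts.hostname,  # lower-cased host name, without port
        "homepage": f"{parts.scheme}://{parts.netloc}/",
        "path": path,
        # Simplifying assumption: anything past the root path counts as "deep".
        "is_deep_link": path != "/",
    }


info = describe_link("https://news.example.com/world/2024/story-123?ref=share")
print(info["homepage"], "->", info["path"])
# https://news.example.com/ -> /world/2024/story-123
```

Showing the host and homepage next to the path is one way a preview could restore some of the context that a deep link bypasses.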

The advent of deepfakes amplifies these concerns. Deepfakes are videos, images, or audio manipulated or generated with deep learning so that a person appears to say or do something they never did, and the most convincing ones are hard to distinguish from authentic footage. They can be used to spread disinformation, damage reputations, or even incite violence. As the underlying technology grows more sophisticated, fabricated content becomes harder to detect, and because such material is easy to create and distribute online, it is a potent weapon for anyone seeking to sow discord or manipulate public opinion. Links to this content, whether shared accidentally or deliberately, further muddy the waters by steering users toward potentially harmful material.

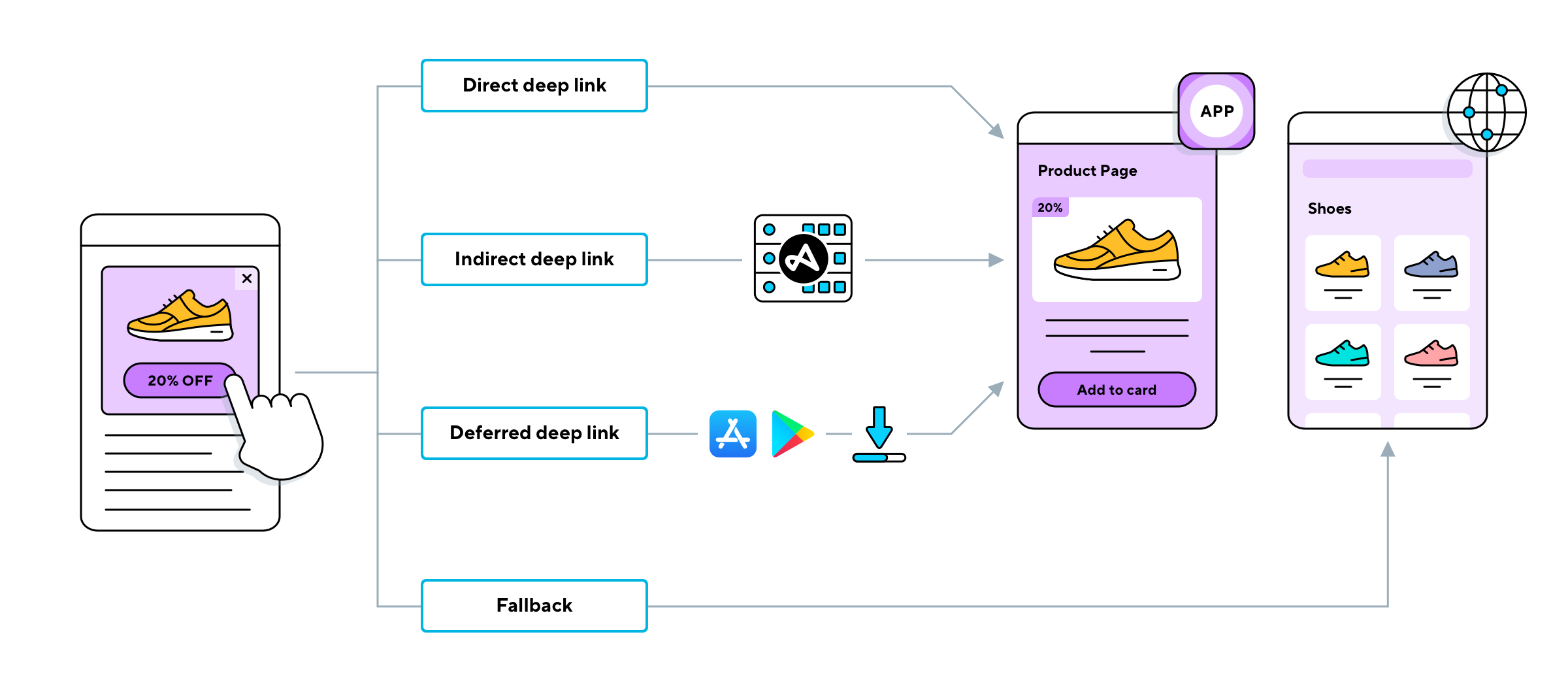

Deferred deep linking adds another layer of complexity. It lets a link direct users to content inside a mobile application even if the app is not yet installed on their device. When a user without the app clicks a deferred deep link, they are first sent to the Apple App Store or Google Play to download it. The link's intended destination is typically recorded at click time, and once the app is installed and opened for the first time, it retrieves that destination and takes the user straight to the content the link originally pointed to. This streamlines the user experience, which makes it useful for app developers. However, it also complicates content moderation and security, because it gives malicious actors another avenue for distributing harmful content.
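
The deferred flow can be sketched as a small server-side store: the destination is recorded when the link is clicked, and the newly installed app redeems it once on first launch. This is a minimal Python sketch under stated assumptions: `DeferredLinkStore`, its methods, and the `myapp://` scheme are hypothetical, and how the click token survives the trip through the app store (for example, an install referrer or a device-matching service) is deliberately left out.

```python
import secrets
import time
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple


@dataclass
class DeferredLinkStore:
    """Hypothetical server-side record of where a deferred deep link was meant to go."""

    ttl_seconds: float = 3600.0
    # token -> (destination, click timestamp)
    _pending: Dict[str, Tuple[str, float]] = field(default_factory=dict, init=False)

    def record_click(self, destination: str, now: Optional[float] = None) -> str:
        """Step 1: the user clicks the link before the app is installed."""
        token = secrets.token_urlsafe(16)
        self._pending[token] = (destination, time.time() if now is None else now)
        # Step 2 (not shown): the token survives the app-store install through
        # some attribution mechanism, which is assumed here.
        return token

    def redeem_on_first_launch(self, token: str, now: Optional[float] = None) -> Optional[str]:
        """Step 3: the freshly installed app asks where the user was headed."""
        entry = self._pending.pop(token, None)  # pop makes each token single-use
        if entry is None:
            return None
        destination, clicked_at = entry
        now = time.time() if now is None else now
        if now - clicked_at > self.ttl_seconds:
            return None  # too old: open the app's default screen instead
        return destination


store = DeferredLinkStore(ttl_seconds=600)
token = store.record_click("myapp://products/42")
print(store.redeem_on_first_launch(token))  # myapp://products/42
print(store.redeem_on_first_launch(token))  # None (already redeemed)
```

Single-use redemption and an expiry window are design choices that limit how long a stale or leaked token can steer someone into the app.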

Consider the actress Shruti Haasan, a prominent figure in Indian cinema. Deepfake videos purporting to feature her have circulated online, highlighting how vulnerable public figures are to malicious manipulation. Such videos, often sexually suggestive or designed to defame, use technology to create a false representation of reality and can cause significant damage to the subject's reputation and well-being. The ease with which this content can be found and shared underscores the need for greater vigilance and proactive measures to curb misinformation and protect individuals from harm.

The following table sets out a conceptual profile of an imagined public figure to illustrate how such a situation might affect someone. It is only an example: every detail is fictional, and the table contains no information about any real person.

| Bio-Data: Hypothetical Public Figure | |
|---|---|
| Name: | Anya Sharma (Fictional) |
| Profession: | Journalist, Media Personality |
| Date of Birth: | September 12, 1988 (Fictional) |
| Place of Birth: | Mumbai, India (Fictional) |
| Education: | Master's in Journalism, Columbia University (Fictional) |
| Career Highlights: | |
| Impact of Deepfakes/Misinformation: | |
| Professional Challenges: | |
| Countermeasures: | |
| Reference Website (Conceptual): | Example Legitimate News Site (Fictional) |

The proliferation of deepfakes, and the ease with which deep links can deliver users straight to them, threaten the integrity of information online. The potential for abuse is substantial for individuals and for society as a whole: when images and videos can be convincingly manipulated and widely distributed, audiences have less reason to trust what they see. Because the public increasingly relies on digital platforms for information, the misuse of those platforms matters all the more. This is a social problem as much as a technological one, and it demands a multi-pronged response that combines technological solutions, legal frameworks, and a greater emphasis on digital literacy.

One crucial element of tackling this challenge is improving our ability to identify fabricated content. That means developing and deploying detection tools that automatically flag potentially manipulated images and videos. Researchers are pursuing several methods, including analyzing the metadata of digital files, examining the artifacts that image and video manipulation leave behind, and using artificial intelligence to spot inconsistencies that may indicate a deepfake. It is equally vital to improve the public's ability to tell fact from fiction. That involves educating people about the tools and techniques used to create deepfakes and the motives of those who create them. Digital literacy programs can play a crucial role in helping individuals critically evaluate the information they encounter online, and schools, libraries, and community organizations can all help foster these media literacy skills.
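
As an illustration of the metadata angle only, the sketch below checks a dictionary of EXIF-style fields for simple red flags (`Make`, `Model`, `Software`, `DateTimeOriginal`, and `DateTime` are standard EXIF tag names). It assumes the metadata has already been extracted into a plain dict, and the list of editing-software hints is a hypothetical placeholder. Metadata is easy to strip or forge, and legitimate photos are routinely edited, so a flag is a reason to look closer rather than proof of manipulation, and an empty result proves nothing.

```python
from datetime import datetime
from typing import List

# Hypothetical hint list for illustration; real detectors use far richer signals.
EDITING_SOFTWARE_HINTS = ("photoshop", "gimp")
EXIF_TIME_FORMAT = "%Y:%m:%d %H:%M:%S"  # the date/time layout EXIF uses


def metadata_red_flags(meta: dict) -> List[str]:
    """Return human-readable reasons to look more closely at a file's metadata."""
    flags = []
    if not meta.get("Make") and not meta.get("Model"):
        flags.append("no capture device recorded")
    software = str(meta.get("Software", ""))
    if any(hint in software.lower() for hint in EDITING_SOFTWARE_HINTS):
        flags.append(f"edited with: {software}")
    captured, changed = meta.get("DateTimeOriginal"), meta.get("DateTime")
    if captured and changed:
        gap = datetime.strptime(changed, EXIF_TIME_FORMAT) - datetime.strptime(
            captured, EXIF_TIME_FORMAT
        )
        if gap.total_seconds() > 0:
            flags.append("file changed after capture")
    return flags


print(metadata_red_flags({"Software": "GIMP 2.10"}))
# ['no capture device recorded', 'edited with: GIMP 2.10']
```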

Another important part of the solution is developing legal and ethical frameworks that address the creation and distribution of deepfakes. Laws currently vary by jurisdiction, and there is no unified global approach. Legislation is needed to criminalize the malicious creation and distribution of deepfakes, particularly those designed to cause harm or incite violence, and such laws should also define the responsibility of online platforms to remove or flag content that violates them. Ethical guidelines for the creation and use of deepfake technology are needed as well, promoting transparency and accountability so that the technology is used responsibly.

Beyond these measures, promoting responsible platform practices is vital. Online platforms, the primary vehicles through which deepfakes spread, have a responsibility to act: implementing robust content moderation policies, developing advanced detection tools, and educating users about the risks of deepfakes. Platforms should also be transparent about these efforts, reporting how many deepfakes they detect and remove and what measures they take to keep such content from spreading. Finally, they should work with researchers, policymakers, and civil society organizations to develop and implement effective countermeasures.

Deep linking, which lets users bypass a website's homepage and go directly to a specific page, presents challenges of its own. While convenient, it can be exploited to spread misinformation or send users to harmful content; for example, a deep link could point to a fake news article or a page that promotes hate speech. The problem is compounded by the prevalence of deepfakes, since a deep link can lead users directly to a manipulated video and so help the disinformation spread further.

Addressing this requires a multifaceted approach. First, websites and link-sharing services need security measures that control where their own links lead. A site cannot stop others from linking to its pages, but it can moderate the content it hosts, refuse to redirect users to unvetted external destinations, and check shared or shortened links against lists of known harmful URLs. Second, users need to be educated about the risks of deep links: they should be skeptical of links from unknown sources and verify the authenticity of the information they encounter online. Third, search engines and social media platforms need algorithms that can identify and de-rank websites known to spread misinformation, so that those sites appear less prominently in search results and social media feeds.
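
The first measure, refusing to redirect users to unvetted destinations, can be sketched as an allowlist check that a site's redirect or link-shortening endpoint runs before sending anyone onward. This is a minimal Python sketch assuming a hypothetical `ALLOWED_HOSTS` set and an HTTPS-only policy; a real service would also consult blocklists of known harmful URLs, which are not shown.

```python
from urllib.parse import urlsplit

# Hypothetical allowlist for a site's own redirect or link-shortener endpoint.
ALLOWED_HOSTS = frozenset({"example.com", "www.example.com"})


def is_safe_destination(target: str, allowed_hosts=ALLOWED_HOSTS) -> bool:
    """Allow a redirect only to an HTTPS URL on an explicitly approved host."""
    try:
        parts = urlsplit(target)
    except ValueError:  # e.g. a malformed IPv6 literal
        return False
    if parts.scheme != "https":
        return False  # rejects http:, javascript:, and scheme-relative //host links
    if parts.username is not None or parts.password is not None:
        return False  # user-info in a URL can disguise the real host
    return parts.hostname in allowed_hosts  # hostname is lower-cased, port removed


print(is_safe_destination("https://example.com/articles/42"))  # True
print(is_safe_destination("https://example.com@evil.test/"))   # False
```

Comparing the parsed `hostname` rather than searching the raw string matters: `https://example.com@evil.test/` contains `example.com` but actually points to `evil.test`. Relative paths are rejected too, which is stricter than many sites need.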

Deferred deep linking raises further challenges. Its advantage is a seamless experience that delivers users to the desired content after they install the app, but it also creates opportunities for misuse. Malicious actors could embed such links in deceptive advertising or phishing campaigns: a user who clicks one is prompted to download an app, and if that app is malicious it could steal personal information or install malware. To mitigate these risks, app developers should verify the authenticity of incoming links, validate their parameters before acting on them, and monitor for suspicious activity. App stores should enforce policies that keep malicious apps from being listed in the first place. Users, for their part, should be cautious with links from unknown sources and should verify an app's authenticity before downloading it.
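
One common way to check that a link is authentic is to sign its parameters with a secret key when the link is issued and verify the signature before acting on it. The sketch below uses HMAC-SHA256 from Python's standard `hmac` and `hashlib` modules; `sign_link`, `verify_link`, the `sig` parameter name, and the key are hypothetical. Because a secret embedded in an app binary can be extracted, this check would run on the developer's backend, with the app forwarding the incoming link for verification; public-key signatures are an alternative when the app must verify locally.

```python
import hashlib
import hmac
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical key; keep it on the server, never inside the app binary.
SECRET_KEY = b"replace-with-a-server-side-secret"


def _canonical(scheme: str, netloc: str, path: str, params) -> bytes:
    """Serialize the link with sorted parameters so signing is order-independent."""
    return f"{scheme}://{netloc}{path}?{urlencode(sorted(params))}".encode()


def _mac(key: bytes, message: bytes) -> str:
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def sign_link(url: str, key: bytes = SECRET_KEY) -> str:
    """Append a `sig` parameter holding an HMAC-SHA256 of the link (fragment dropped)."""
    parts = urlsplit(url)
    params = parse_qsl(parts.query)
    sig = _mac(key, _canonical(parts.scheme, parts.netloc, parts.path, params))
    query = urlencode(sorted(params) + [("sig", sig)])
    return urlunsplit((parts.scheme, parts.netloc, parts.path, query, ""))


def verify_link(url: str, key: bytes = SECRET_KEY) -> bool:
    """Recompute the HMAC and compare it in constant time with the supplied `sig`."""
    parts = urlsplit(url)
    params = parse_qsl(parts.query)
    sigs = [value for name, value in params if name == "sig"]
    if len(sigs) != 1:
        return False  # missing or duplicated signature
    rest = [(name, value) for name, value in params if name != "sig"]
    expected = _mac(key, _canonical(parts.scheme, parts.netloc, parts.path, rest))
    # Compare as bytes so a non-ASCII forged signature cannot raise TypeError.
    return hmac.compare_digest(expected.encode(), sigs[0].encode())


link = sign_link("myapp://product/42?campaign=spring&ref=ad")
print(verify_link(link))                                       # True
print(verify_link(link.replace("product/42", "product/666")))  # False
```

Sorting the parameters gives a canonical form, so reordering them does not break the signature, while changing the path, adding a parameter, or altering a value does.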

The rise of deepfakes, together with the risks posed by deep and deferred deep linking, highlights a critical need for a coordinated response from governments, technology companies, and the public. The challenge is not only technological; it is an ethical, social, and legal test that calls for an approach prioritizing truth, security, and responsible digital citizenship. Only through constant vigilance, robust technological solutions, and widespread public awareness can we hope to navigate this complex digital landscape.